Methodology

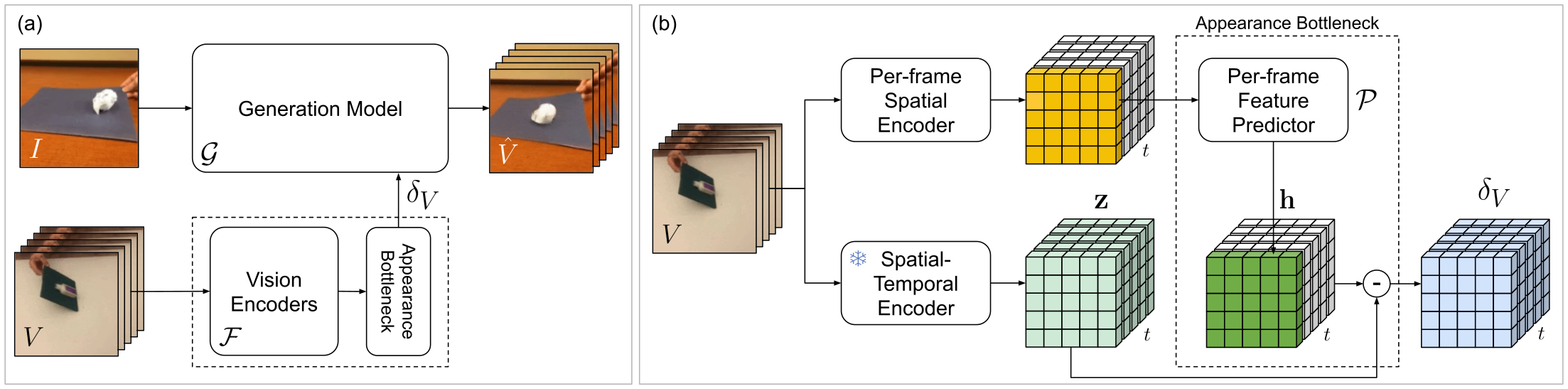

(a) Overview of \(\delta\)-Diffusion. The initial frame \(I\) is provided to the generation model \(\mathcal{G}\) along with the action latents \(\delta_V\) extracted from the demonstration video \(V\). The output \(\hat{V}\) continues naturally from \(I\) and carries out the actions in \(V\). During training, the target \(\hat{V}\) is the same as the demonstration \(V\). (b) Extracting action latents. First, a video encoder extracts the spatiotemporal representations \(\mathbf{z}^{ST}\) from demonstration \(V\), with \(t\) denoting the temporal dimension. In parallel, an image encoder extracts per-frame spatial representations \(\mathbf{z}^{S}\) from \(V\), which are aligned to \(\mathbf{z}^{ST}\) by feature predictor \(\mathcal{P}\). The appearance bottleneck then computes the action latents \(\delta_V\) by subtracting the aligned spatial representations \(\mathcal{P}(\mathbf{z}^S_t)\) from the spatiotemporal representations \(\mathbf{z}^{ST}_t\) for each frame \(V_t\).